Alba Carballo González will defend her doctoral thesis next Monday, January 13

- The defence will take place in the Adoración de Miguel Room 1.2.C16 of the Betancourt building, on the Leganés Campus of Universidad Carlos III de Madrid, at 11:00 am

Alba Carballo graduated in Mathematics from the Universidad de Santiago de Compostela in 2012. During her degree she studied at the University of Southampton (United Kingdom) under the Erasmus Programme. In 2015 she obtained her Master's degree in Mathematical Engineering from Universidad Carlos III de Madrid, and in September of the same year she began a PhD in Mathematical Engineering with Statistics as her main area of study and research. During her PhD, Alba completed a research stay with Prof. Göran Kauermann, Professor of Statistics at Ludwig-Maximilians-Universität in Munich.

Her doctoral thesis has been supervised by María Durbán of Universidad Carlos III de Madrid and by researcher Dae-Jin Lee, leader of the Applied Statistics research line at the Basque Center for Applied Mathematics (BCAM).

On behalf of all the members of the centre, we wish Alba the best of luck in her thesis defence.

Title: A General Framework for Prediction in Generalized Additive Models

Smoothing techniques have become one of the most popular modelling approaches in the unidimensional and multidimensional setting. However, out-of-sample prediction in the context of smoothing models is still an open problem, and solving it could significantly widen the use of these models in many areas of knowledge. The objective of this thesis is to propose a general framework for prediction in penalized regression, particularly in the P-splines context.

To that end, Chapter 1 includes a review of the different proposals available in the literature, together with results that are useful and necessary throughout the document: the formulation of a P-spline model and its reparameterization as a mixed model.

In Chapter 2, we generalize the approach given by Currie et al. (2004) to predict with any regression basis and quadratic penalty. For the particular case of penalties based on differences between adjacent coefficients, we reparameterize the extended P-spline model as a mixed model, prove that the fit remains the same as the one obtained when only the data are fitted, and show the crucial role of the penalty order, since it determines the shape of the prediction. Moreover, we adapt methods available in contexts such as mixed models (Gilmour et al. 2004) or global optimization (Sacks et al. 1989) to predict in the context of penalized regression, and prove their equivalence for the particular case of P-splines. An extensive section of examples illustrates the application of the methodology. We use three real datasets with particular characteristics: one, on aboveground biomass, allows us to show that prediction can also be performed to the left of the data; another, on monthly sulphur dioxide levels, illustrates how prediction can take into account temporal trends and seasonal effects by using the smooth modulation model based on P-splines suggested by Eilers et al. (2008); and the third, on yearly sea level, shows that prediction can also be carried out in the case of correlated errors. We also introduce the concept of "memory of a P-spline" as a tool to know how much of the known information we use to predict new values.

In the third chapter, we propose a general framework for prediction in multidimensional smoothing, extending the proposal of Currie et al. (2004) to predict when more than one covariate is extended. The extension of the prediction method to the multidimensional case is not straightforward because, in this context, the fit changes when the fit and the prediction are carried out simultaneously. To overcome this problem we propose an easy but elegant solution based on Lagrange multipliers. The first part of the chapter is dedicated to showing how out-of-sample predictions can be carried out in the context of multidimensional P-splines, and to the properties satisfied, under certain conditions, by the coefficients that determine the prediction. We also propose the use of restrictions to maintain the fit and, in general, to incorporate any known information about the prediction. The second part of the chapter is dedicated to extending the methodology to the smooth mixed model framework. It is known that when a P-spline model is reparameterized as a mixed model, the structure of the coefficients is lost, that is, they are no longer ordered according to the position of the knots. This fact is not relevant when we fit the data, but if we predict and impose restrictions on the coefficients, we need to differentiate between the coefficients that determine the fit and the coefficients that determine the prediction. To do so, we define a particular transformation matrix that preserves the original model matrices. The prediction method and the use of restrictions are illustrated with a real data example on log mortality rates of the US male population. We show how to solve the crossover problem of adjacent ages when mortality tables are forecasted, and compare the results with the well-known method developed in Delwarde et al. (2007).

The research in Chapter 4 is motivated by the need to extend the prediction methodology in the multidimensional case to more flexible models, the so-called Smooth-ANOVA models, which allow us to include interaction terms that can be decomposed as a sum of several smooth functions. The construction of these models through B-spline bases suffers from identifiability problems. There are several alternatives to solve this problem; here we follow Lee and Durbán (2011) and reparameterize them as mixed models. The first two sections of the chapter are dedicated to introducing the Smooth-ANOVA models and to showing how out-of-sample prediction can be carried out in these models. We illustrate prediction with Smooth-ANOVA models by reanalyzing the dataset on aboveground biomass. Here, the Smooth-ANOVA model allows us to represent the smooth function as the sum of a smooth function for the height of a tree, a smooth function for its diameter, and a smooth term for the height-diameter interaction. At the end of this chapter, we provide a simulation study to evaluate the accuracy of the 2D interaction P-spline models and Smooth-ANOVA models, with and without imposing invariance of the fit. From the results of the simulation study, we conclude that in most situations the constrained S-ANOVA model behaves better in the fit and in out-of-sample predictions; however, results depend on the simulation scenario and on the number of dimensions in which the prediction is carried out (one or both dimensions).

In the fifth chapter, we generalize the developed methodology to generalized linear models (GLMs) in the context of P-splines (P-GLMs) and mixed models (P-GLMMs). In both frameworks, the procedures for estimating coefficients and parameters involve nonlinear equations. To solve them, iterative algorithms based on the Newton-Raphson method are used, regardless of the estimation criterion (for instance, in the GLMM context we can maximize the residual maximum likelihood (REML) or an approximate REML based on the Laplace approximation). These iterative algorithms are based on a working normal theory model or a set of pseudodata and weights. Based on this idea, we extend the Penalized Quasilikelihood method (PQL) to fit and predict simultaneously in the context of GLMMs. We highlight that, in the context of mixed models (even in the univariate case), maintaining the fit requires a transformation that preserves the original model matrices, since different transformations deal with different working vectors and therefore lead to different solutions. We also show how restrictions can be imposed in P-GLMs and P-GLMMs. To illustrate the procedures, we use a real dataset to predict deaths due to respiratory disease through 2D interaction P-splines and S-ANOVA models (both with and without the restriction that the fit be maintained).

Finally, Chapter 6 summarizes the main conclusions and poses a list of future lines of work.
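As a rough illustration of the one-dimensional result described in the abstract (the fit is unchanged when fitting and predicting simultaneously, and the penalty order determines the shape of the prediction), the sketch below works in a deliberately simplified setting. The simplifications are assumptions of this example and not the thesis's implementation: an identity basis (a Whittaker-type smoother) stands in for a B-spline basis, out-of-sample positions are handled with zero weights, the data are simulated, and the names `difference_matrix` and `penalized_fit` are hypothetical.

```python
import numpy as np

def difference_matrix(size, order):
    """Matrix whose rows take order-th differences of adjacent coefficients."""
    return np.diff(np.eye(size), n=order, axis=0)

def penalized_fit(y, weights, lam, order):
    """Minimize sum(w * (y - theta)**2) + lam * ||D theta||**2 (identity basis)."""
    D = difference_matrix(len(y), order)
    W = np.diag(weights)
    return np.linalg.solve(W + lam * D.T @ D, W @ y)

# Simulated data (assumption: any noisy smooth signal would do here).
rng = np.random.default_rng(0)
n, k, lam, order = 50, 10, 10.0, 2
x = np.linspace(0.0, 1.0, n)
y = np.sin(2 * np.pi * x) + rng.normal(scale=0.1, size=n)

# Fit using the observed data only.
fit_only = penalized_fit(y, np.ones(n), lam, order)

# Fit and predict simultaneously: append k positions with zero weight,
# so only the penalty determines the coefficients at those positions.
y_ext = np.concatenate([y, np.zeros(k)])
w_ext = np.concatenate([np.ones(n), np.zeros(k)])
fit_and_forecast = penalized_fit(y_ext, w_ext, lam, order)

# Check 1: the in-sample fit is the same as when only the data are fitted.
fit_unchanged = np.allclose(fit_and_forecast[:n], fit_only, atol=1e-6)

# Check 2: with a second-order penalty the forecast continues linearly,
# i.e. second differences vanish from the last fitted coefficients onward.
forecast_is_linear = np.allclose(
    np.diff(fit_and_forecast[n - order:], n=order), 0.0, atol=1e-6
)
print(fit_unchanged, forecast_is_linear)
```

Under these assumptions, changing `order` to 3 would make the extended coefficients follow a quadratic rather than a straight line, which is one way to see why the penalty order governs the shape of the prediction.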

Related news

Sobre el centro

ESGI 188 (European Study Group with Industry) tendrá lugar en Bilbao del 26 al 30 de mayo de 2025

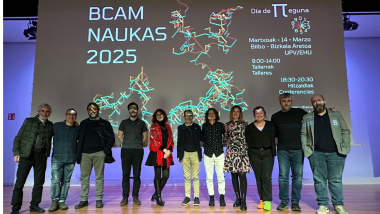

La gente del BCAM